Tag: values

-

chat 2025-08-08

A collaborative project?

Transcript of a chat between Alec Fearon and ChatGPT 5 on 9 August 2025

Alec said:

<reference>Marjon University is interested in a collaboration with Anapoly. The collaboration promises to extend the capability of both organisations for the social good.

<reference>Students are among those most at risk from the misuse of AI.

<reference>There is a risk that students might outsource their thinking to AI and lose or not acquire the ability for critical thinking.

<principle>There is a need to imbue students with values of benefit to society.

<principle>Students need to have an ethos of caring about how the human-AI relationship evolves.

<process>An LLM can be configured to support students to become wiser.

<concept>Anapoly Notebook will have an area in which philosophical ideas can be explored.

<task>Discuss

ChatGPT said:

Here’s how the elements you’ve listed fit together, and where the “LLM to support wisdom” question becomes central.

1. Collaboration premise

Marjon University and Anapoly are both interested in AI for social good.

- Marjon brings access to students, an at-risk group in terms of AI misuse.

- Anapoly brings structured experimentation methods and public transparency.

- The shared value space is about enhancing capability without eroding human judgement.

2. Risk frame

- Students may “outsource” their thinking to AI, which risks weakening critical faculties.

- The misuse risk is not just about plagiarism or misinformation — it’s about habits of mind.

- In your own frameworks, this parallels the acclimatisation → experimentation → proof-of-concept → iteration lab stages [Lab Framework v03], but with a moral and developmental overlay.

3. Guiding principles

From your notes:

- Value formation: embed values that benefit society, not just skills for employability.

- Ethos of stewardship: care about the human–AI relationship as something to be shaped, not passively accepted.

These align with NIST’s idea that human-AI interaction should be goal- and outcome-oriented and that context of use (technical, social, cultural) is part of the design space [taxonomy NIST.AI.200-1].

4. Process implication — “LLM to support wisdom”

If the LLM is to support students in becoming wise rather than just becoming efficient, configuration needs to be deliberate. That could mean:

- Prompt scaffolds that force reflection before answer consumption (e.g., “What reasoning steps did you take before consulting me?”).

- Bias and value checks embedded in interactions.

- Structured content that guides the AI to emphasise principles, trade-offs, and ethical consequences, not just factual answers [Structured Content (Not…].

- Review stages (your Lab Framework’s “Review” and “Wrap-up”) where human participants articulate learning and value implications, not just outcomes [Lab Framework v03].

5. Conceptual integration — Anapoly Notebook’s philosophical area

- The philosophical space could act as a sandbox for wisdom-support configurations.

- It would host experiments in value-aware prompting, critical-thinking patterns, and ethics-in-context scenarios.

- Publishing these experiments — with your transparency labelling [transparency framework …] and structured lab notes [lab note structure – An…] — could both demystify the work and invite peer contribution.

6. Discussion points for you and Marjon

- Scope of “wisdom” — Is it moral reasoning, long-term perspective, integration of knowledge, or something else?

- Measurement — How will we know if an LLM interaction genuinely promotes wiser thinking?

- Configuration approach — Do we embed guardrails and prompts in the LLM, or do we teach students to use general-purpose models in wise-making ways?

- Risk mitigation — How do we ensure “wisdom-support” does not become disguised persuasion or value-imposition?

- Sustainability — How will the philosophical area in Anapoly Notebook be maintained, curated, and used in teaching and research?

If you want, I can map these into a pilot lab design showing how to test “LLM-for-wisdom” with students under your existing Lab Framework, including setup, roles, and asset flow. That would make it operational rather than aspirational.

Alec said:

To begin with at least, I think I’d like the philosophical space to focus on the guiding principles of value formation and ethos. Indeed, we might perhaps call that space values and ethos. Please comment on that.

ChatGPT said:

Calling the space Values and Ethos is sharper and easier to grasp than “philosophical space.”

Advantages:

- Plain English — avoids sounding like an abstract academic corner.

- Signals purpose — makes it clear this is about normative grounding, not idle speculation.

- Invites participation — “values” and “ethos” are terms students and practitioners can relate to, even if they wouldn’t enter a “philosophy” section.

- Maps to your stated principles — it directly reflects the two you’ve prioritised: values of benefit to society and caring about the human–AI relationship.

Potential refinements:

- Decide whether this space is exploratory (asking “what values should guide us?”) or declarative (asserting “these are our values”). The tone and format will differ.

- Consider whether to frame it as Values & Ethos in Human–AI Interaction — that ties it directly to your mission and helps prevent it from drifting into generic moral commentary.

- If it becomes a standing area in Anapoly Notebook, align it with your lab note structure and transparency framework so that each entry is both open-ended and methodically documented.

If you want, I can outline a content template for Values & Ethos posts that keeps them grounded, repeatable, and linked back to your lab process. That would stop it from becoming a loose collection of opinion pieces.

Alec said:

Yes, it will be the values and ethos in human-AI interaction. The end product will be a long-form essay, and the philosophical space, the values and ethos space, will be the home for a project to develop that long-form essay. My feeling is that this might fit the academic approach that the university might like. It could be something that students would benefit from participating in. What are your thoughts about all this?

ChatGPT said:

I think you’re right — framing it as a project in the Values & Ethos space with the goal of producing a long-form essay does several useful things.

Why it works for Marjon and students:

- Academic compatibility – A defined research-to-publication pathway will feel familiar in a university setting. It echoes dissertation or capstone work, but in a collaborative and experimental format.

- Student engagement – Gives them a stake in shaping the final product, rather than just reading about it. They’d contribute insights, literature reviews, use-case critiques, and possibly their own “values tests” of LLM interactions.

- Structured rigour – If you wrap it in your Lab Framework [Lab Framework v03], you get planning, operation, review, and wrap-up phases. That produces an audit trail and deliverables Marjon can reference.

- Ethical literacy – Students don’t just hear “AI has risks”; they practise articulating what values matter and why, and apply them to concrete human–AI interaction scenarios.

How I’d suggest shaping it:

- Working Title – Values & Ethos in Human–AI Interaction: A Collaborative Inquiry.

- Method – Treat it as a series of mini-labs inside the project, each exploring one principle (e.g. transparency, reciprocity, autonomy).

- Roles – Facilitator (Anapoly), Explorers (students), Quality & Trust Steward (faculty or Anapoly lead to ensure coherence and academic integrity).

- Assets – Each mini-lab produces a lab note [lab note structure – An…], feeding into sections of the final essay.

- Transparency – Apply your existing classification labels [transparency framework …] so readers see where AI contributed to the thinking or drafting.

Benefits:

- For Marjon: a tangible, citable output demonstrating responsible AI pedagogy.

- For students: co-authorship or acknowledgment in a public, high-quality piece of work.

- For Anapoly: a tested format for running “wisdom-support” labs in other contexts.

If you like, I can map this into a Values & Ethos project plan using your Lab Framework so you can present it to Marjon as both academically respectable and operationally concrete.

Alec said:

We must remember that the long-form essay, the end result, is important in its own right and is a key purpose for the work. Equally important is the fact that we’re using that end product as a vehicle for learning how to make use of AI in a real-life setting, which was, of course, the original purpose of Anapoly AI Labs.

ChatGPT said:

Yes — that dual purpose is the strength of it, and it’s worth making explicit from the outset:

- The essay as a scholarly contribution

  - Stands alone as a thoughtful, well-researched piece on Values & Ethos in Human–AI Interaction.

  - Has lasting value for Marjon, Anapoly, and a wider audience.

- The essay as a vehicle for applied AI learning

  - Every step — research, drafting, review — becomes a live case study in how to work productively and critically with AI.

  - Students and faculty experience the Lab Framework in action, not just as a concept.

  - Transparency labels and lab notes document how AI contributed, creating a meta-layer of insight.

If you frame it this way when pitching to Marjon, you avoid the trap of the essay being seen as just an “output” or the process being seen as just “teaching AI skills.” You’re showing how a real intellectual task can be both:

- a product worth publishing, and

- a process worth replicating in other academic or professional contexts.

I’d suggest we write the project brief with two explicit objectives:

- Produce a long-form essay that is a credible academic/public contribution.

- Demonstrate and document the effective, ethical use of AI in a collaborative research and writing process.

If you want, I can draft that two-objective project brief in your Lab Framework style so it’s ready to drop into a Marjon proposal. That way, they see the scholarly goal and the applied-learning goal as equal, deliberate parts of the design.

Alec said:

What is the overarching aim, goal or purpose under which these two objectives sit?

ChatGPT said:

The overarching purpose could be framed like this:

To explore and model how collaborative, transparent use of AI can produce work of lasting intellectual and social value.

That umbrella is broad enough to cover:

- the content aim — producing a serious, credible essay on Values & Ethos in Human–AI Interaction, and

- the process aim — learning and demonstrating effective AI-assisted research and writing in a real-world, academically relevant project.

It also ties straight back to Anapoly AI Labs’ founding purpose: using genuine, meaningful tasks to learn how to work with AI in ways that are both competent and principled.

If you’d like, I can suggest two or three alternative phrasings so you can choose one that works both for an academic audience and for your own internal framing.

Alec said:

Yes, that will be helpful. Please do suggest some alternative phrasings.

ChatGPT said:

Here are three variants, each with a slightly different emphasis but covering both the essay and the applied-learning dimension:

- Exploratory emphasis

  To investigate how values and ethos can shape human–AI interaction, while demonstrating in practice how AI can be used collaboratively and transparently to create work of lasting academic and social worth.

- Applied-learning emphasis

  To use the creation of a substantive essay on values and ethos in human–AI interaction as a live, documented case study in effective, ethical, and collaborative use of AI tools.

- Mission-aligned emphasis

  To model the Anapoly AI Labs approach — learning through real work — by producing a long-form essay on values and ethos in human–AI interaction that stands as both a credible contribution in its own right and a demonstration of best practice in applied AI collaboration.

Would you like me to also align your two objectives under the one you choose, so the whole structure reads as a coherent project statement?

Alec said:

Let me add this reference information into your context. Peer Assisted Learning (PALS), an initiative providing peer-to-peer support for students, will create an AI Support Team whose members will take part in the values and ethos project. Their wider remit will be to build a community of interest around ethical AI use.

ChatGPT said:

Noted — that gives the project an immediate operational base and a built-in community element. It also means the learning objective can extend beyond the essay team into PALS’ broader peer-to-peer network.

Alec said:

Turning back to purpose, item three, the mission-aligned emphasis, is not appropriate. One and two are both good in their separate ways, but I would like to see whether we can merge them in some fashion. What can you suggest?

ChatGPT said:

Here’s a merged version that keeps the intellectual focus of (1) and the applied-learning clarity of (2):

Proposed merged purpose

To investigate how values and ethos can shape human–AI interaction, using the creation of a substantive long-form essay as both a credible academic contribution and a live, documented case study in the effective, ethical, and collaborative use of AI tools.

It keeps:

- The why — exploring values and ethos in human–AI interaction.

- The what — producing a substantial essay with academic credibility.

- The how — framing the work as a real-world, documented AI collaboration that others can learn from.

Would you like me to also condense this into a one-sentence “headline” form for use at the very top of the project brief? That can help keep everything anchored.

Alec said:

Rather than simply investigate how values and ethos can shape human-AI interaction, I want us to propose a framework of values and ethos which can do that.

ChatGPT said:

Then the merged purpose could be tightened to reflect that generative intent:

To propose a framework of values and ethos to guide human–AI interaction, using the creation of a substantive long-form essay as both a credible academic contribution and a live, documented case study in the effective, ethical, and collaborative use of AI tools.

That signals:

- Not just exploration, but delivery of a structured framework.

- A tangible scholarly output (the essay) as the vehicle.

- The process-as-learning dimension stays intact.

If you want, I can also work up a short variant — something you could put in a slide or pitch to Marjon — that keeps the same meaning but is under 20 words.

Alec said:

No, I don’t like that. Go back to the previous purpose and simply replace the word investigate with the word propose.

ChatGPT said:

Understood. That gives us:

To propose how values and ethos can shape human–AI interaction, using the creation of a substantive long-form essay as both a credible academic contribution and a live, documented case study in the effective, ethical, and collaborative use of AI tools.

It keeps the original structure you liked, but makes the intent to offer something constructive — not just analyse — explicit.

Alec said:

It’s good. Please put it into a canvas with the title Purpose.

ChatGPT said:

Purpose

To propose how values and ethos can shape human–AI interaction, using the creation of a substantive long-form essay as both a credible academic contribution and a live, documented case study in the effective, ethical, and collaborative use of AI tools.

-

Shaping Human–AI Interaction

An exploration of how values, ethics, and ethos can shape human-AI interaction

Authors: Alec Fearon in collaboration with ChatGPT, Perplexity and NotebookLM

This piece is being written as an evolving, long-form essay, the first item in what might become Anapoly’s “digital garden” (see Maggie Appleton’s excellent explanation of this concept).

Started 9 August 2025, last updated 9 August 2025

Background

On 7 August 2025, Anapoly ran a trial “Acclimatisation Lab”. One of the participants was G, a member of staff from Marjon University. He was impressed by Anapoly’s approach to the use of AI and, in later discussion, suggested the possibility of a collaboration with the university. After exploring some of the options for this, the conversation became a bit philosophical. It touched on the ethics of AI, the risk that students might outsource their thinking, the need to imbue students with values of benefit to society, and the need for them to have an ethos of caring about how the human-AI relationship evolves. This prompted Alec and G to begin thinking about the possibility of basing the collaboration on a project to explore these issues in more detail. Hence this essay.

Purpose

Source: Chat with ChatGPT

Goal

To propose how an educational establishment can inculcate the values, ethics, and ethos needed to shape responsible use of AI.

Outcomes

Academic output – A credible, well-researched essay proposing a framework for guiding human–AI interaction.

Applied learning – A live, documented example of using AI in a real intellectual task, showing good practice in configuration, transparency, and collaboration.

Approach

Peer-to-peer working

Collaboration with AI

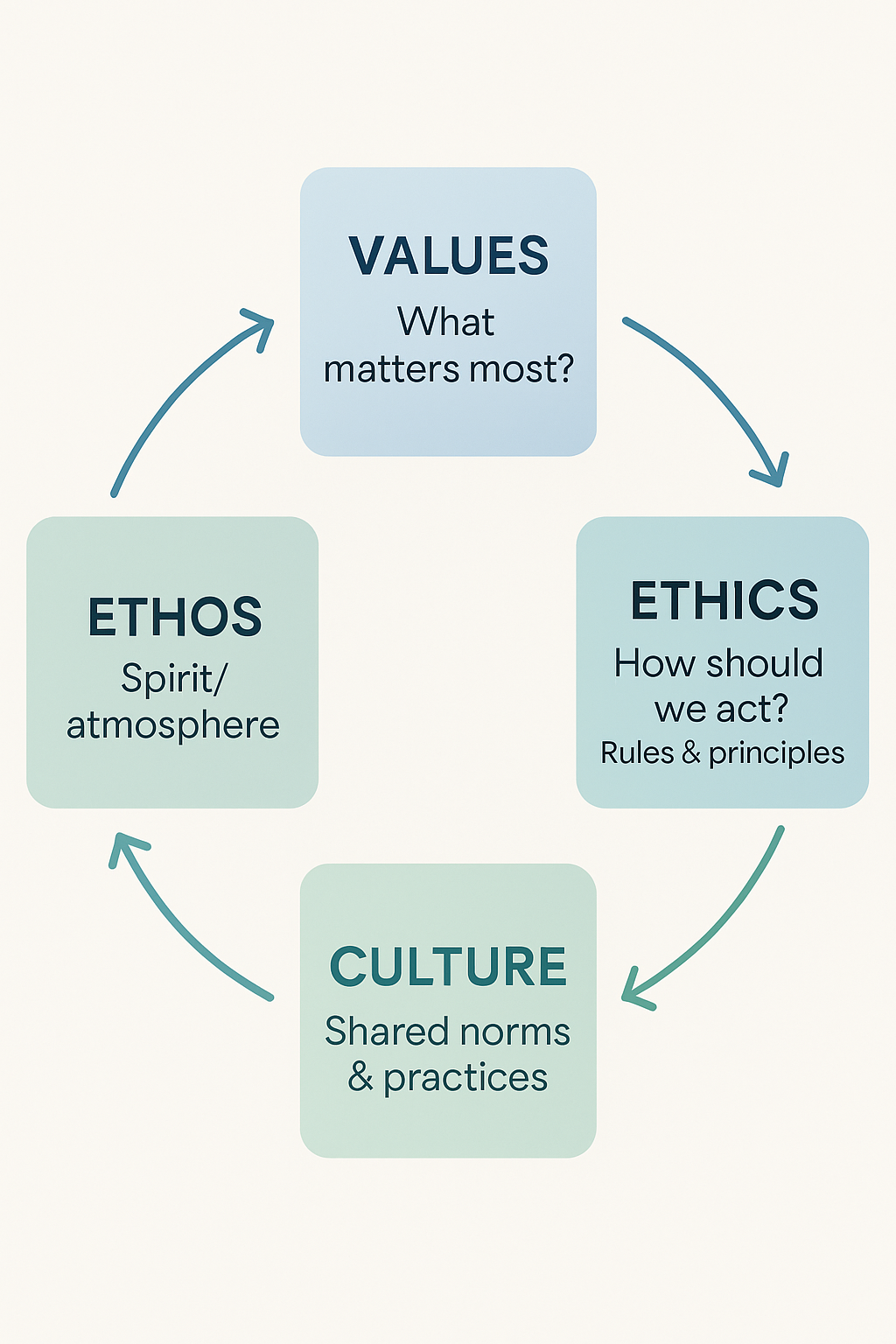

How Values, Ethics, Ethos, and Culture Connect

Source: Chat with Perplexity

At the foundation are values — the beliefs and principles about what is important, desirable, or right. They answer the question: What matters most to us? Values may be personal (honesty, curiosity), organisational (innovation, accountability), or societal (justice, equality). They are the moral “raw material” from which everything else flows.

Culture is the lived environment shaped by shared history, relationships, language, and traditions. It is how people actually live and work together over time. Culture naturally expresses shared values but also shapes them — reinforcing some, downplaying others. It develops whether or not there is any formal ethical guidance. When culture comes first, it carries implicit norms — unwritten but widely understood expectations of behaviour. These can act like an informal ethical framework, but they are not always coherent, inclusive, or consciously examined.

Ethics is where values become explicit, systematic guidance for behaviour. An ethical framework takes values (and sometimes external influences like laws and professional standards) and turns them into principles, rules, or reasoning methods. Ethics asks: Given our values, how should we act, and why? Formal ethics codifies expectations; informal ethics may be embedded in culture without being written down.

Ethos refers to the characteristic spirit or “feel” of a group — the lived expression of values within a culture. While ethics defines the rules and reasoning, ethos captures the atmosphere and collective character that emerges when values are embodied in daily life. Ethos is tangible in tone, traditions, and shared habits: e.g., a research lab where “integrity and curiosity” aren’t just rules, but felt in how people question, listen, and share.

Putting it together:

- Values are the starting point — our moral compass.

- Ethics is a reasoned map that guides how we apply those values in real situations.

- Culture is the social terrain where these values and ethics live, evolve, and sometimes clash.

- Ethos is the vibe or spirit of that culture when values are actively lived out.

-

chat 2025-08-09

Values, Ethics & Ethos

Transcript of a chat between Alec Fearon and Perplexity AI on 9 August 2025

Alec said

<reference>Colleagues in Anapoly Ltd seek to bridge the gap between mystery or curiosity about AI, and its practical use. We want to help professionals like us apply AI tools to real tasks in a hands-on, transparent way.

<reference>Marjon University is interested in the possibility of a collaboration with Anapoly. The collaboration promises to extend the capability of both organisations for the social good.

<reference>Students are among those most at risk from the misuse of AI.

<reference>There is a risk that students might outsource their thinking to AI and lose or not acquire the ability for critical thinking.

<reference>Anapoly Notebook https://anapoly.co.uk/labs/

<reference> Anapoly Notebook is a website where we document our experiments in the day-to-day use of general-purpose AI. We reflect on what works (and what doesn’t), and share our thinking as it develops

<principle>There is a need to imbue students with values of benefit to society.

<principle>Students need to have an ethos of caring about how the human-AI relationship evolves.

<process>An LLM can be configured to support students to become wiser.

<concept>Anapoly Notebook will have an area in which philosophical ideas can be explored.

<process> Assume Anapoly and Marjon will run a collaborative project. This would be both a substantial piece of work in its own right and a vehicle for applied learning with AI. Purpose: to propose how values and ethos can shape human–AI interaction, using the creation of a substantive long-form essay as both a credible academic contribution and a live, documented case study in the effective, ethical, and collaborative use of AI tools.

<task>Clarify the distinction between “values” and “ethos”.

Perplexity said

Clarifying the Distinction: “Values” vs. “Ethos”

Values

- Values are fundamental beliefs or principles that guide behavior, choices, and judgment.

- They represent what individuals or groups hold as important, worthwhile, or desirable.

- Values are relatively stable over time and can be personal (e.g., honesty, fairness), organisational (e.g., innovation, accountability), or societal (e.g., justice, freedom).

- They often serve as benchmarks for decision-making and evaluating right from wrong.

- Example: In the context of AI, a value might be transparency—the belief that AI systems should be open about their methods and limitations.

Ethos

- Ethos refers to the characteristic spirit, culture, or guiding beliefs of a person, group, organisation, or community.

- It is more than just a collection of values—it is the distinct atmosphere or prevailing philosophy that shapes identity and behavior.

- Ethos is expressed collectively, often through patterns of behavior, shared attitudes, customs, or traditions.

- Ethos provides context—how values are lived out, represented, and fostered within a group.

- Example: An organisation’s ethos might be described as caring and responsible—not just valuing transparency, but actively nurturing a culture where openness and accountability are the norm.

In Practice

- Values are what you believe; ethos is how those beliefs become an embodied, lived reality.

- For a student exploring AI use:

  - Values might include integrity (not cheating) and curiosity (desire to learn).

  - Ethos would be the flavour of the academic environment—whether it encourages deep, critical engagement with AI tools or merely compliance with rules.

Application to Human–AI Collaboration

- In collaborative AI projects (like between Anapoly and Marjon), values guide what is important (e.g., ethical use, social benefit), while ethos shapes how collaboration feels—whether it is imaginative, respectful, or driven by collective responsibility.

- Imbuing students with “values of benefit to society” means teaching what matters.

- Developing an “ethos of caring” ensures those values shape everyday attitudes and practices, including thoughtful, wise use of AI.

Summary:

- Values: The ideals we prioritise and uphold.

- Ethos: The collective character and lived expression of those ideals within a community.

Both are essential for shaping a thoughtful and socially responsible human–AI relationship.

Alec said

<concept>Anapoly Notebook will have an area acting as a home for the collaborative project referred to earlier in this chat. <task>Suggest five appropriate names / titles for that area

Perplexity said

Certainly! Here are five thoughtful name suggestions for the designated area within Anapoly Notebook that will serve as the home for the collaborative project between Anapoly and Marjon:

- The Collaboration Studio

- AI Ethics & Inquiry Hub

- The Marjon–Anapoly Commons

- The Human–AI Exploration Space

- The Values-in-Action Lab

Each of these titles aims to reflect the project’s spirit of partnership, hands-on experimentation, and the exploration of values and ethos in human–AI interaction. Let me know if you’d like suggestions in a particular style or with a different emphasis!

Alec said

<task>Distinguish “ethics” from “values” and “ethos”

Perplexity said

Ethics vs. Values vs. Ethos

Ethics

- Ethics refers to formal systems or frameworks that define what is right and wrong, usually within a specific context (such as professional, academic, or societal domains).

- Ethics are often codified—set out in rules, guidelines, or codes of conduct. These might be developed by professions, institutions, or societies to guide members’ behavior.

- Ethics involves systematic reflection and reasoning about moral questions—“What should we do?” or “What ought to be done?”

- Example: In higher education, an ethics code might prohibit plagiarism or mandate the fair use of AI in assessment.

Values

- Values are core beliefs or principles held by individuals, groups, or societies that inform preferences and shape behavior.

- Values are internal and subjective—they are about what people care about, such as honesty, creativity, or justice.

- They provide the personal or collective rationale for making choices, but are not necessarily made explicit or written down.

- Example: Valuing honesty might guide a student to acknowledge all sources (including AI tools) in their work.

Ethos

- Ethos is the distinctive spirit, culture, or character of a community, organisation, or environment.

- It emerges from how a group enacts its values—through shared attitudes, customs, and habits, often subconsciously.

- Ethos is experienced as the “atmosphere”—is a place supportive, rigorous, open?

- Example: An organisation may have an ethos of collaborative curiosity, where asking questions and learning together (including with AI) is encouraged.

In Summary

| Term | What is it? | How is it expressed? |
|---|---|---|
| Ethics | Codified frameworks that define right and wrong in a context | Codes, rules, guidelines, systematic reasoning |
| Values | Core beliefs or principles held by individuals or groups | Choices, priorities, what people care about |
| Ethos | Characteristic spirit or culture of a group or organisation | Customs, atmosphere, “the way things are done” |

- Ethics: The rules or principles defining “what’s right.”

- Values: What matters most to us.

- Ethos: The lived spirit, culture, or vibe when we put those values into practice together.

In the context of the Anapoly–Marjon collaboration, having an explicit ethics framework could guide the responsible use of AI, shared values would clarify what truly matters, and a strong ethos would shape a positive, principled learning environment.

Alec said

Is it correct to say that values are embodied in an ethical framework which is put into practice through an ethos?

Perplexity said

Yes — that’s a fair and useful way to put it, though we might nuance it slightly.

Your statement — “values are embodied in an ethical framework which is put into practice through an ethos” — captures the general relationship quite well, but here’s a clearer breakdown:

- Values are the underlying beliefs or principles — the “what matters most.”

- An ethical framework takes those values and organises them into a coherent system of reasoning and guidance for action. This is the step where values start becoming explicit rules, norms, or principles (“How should we act if we care about these values?”).

- Ethos is how those principles and values are lived out in the culture, atmosphere, and everyday behaviour of a group.

So in sequence:

Values → inform → Ethical Framework → expressed and reinforced through → Ethos.

One refinement:

While an ethical framework often embodies values, it can also be influenced by laws, professional standards, or other external factors. Likewise, an ethos can reinforce values even without a formal ethical framework — in some communities, the culture comes first, and formal ethics follow.

If you like, I can create a diagram showing this flow so it’s easy to visualise the relationship. Would you like me to do that?

Alec said

<you said>While an ethical framework often embodies values, it can also be influenced by laws, professional standards, or other external factors. Likewise, an ethos can reinforce values even without a formal ethical framework — in some communities, the culture comes first, and formal ethics follow. <principle>Where culture comes before formal ethics, the culture has an implicit, informal ethical framework <task>Critique that principle

Perplexity said

Your principle — “Where culture comes before formal ethics, the culture has an implicit, informal ethical framework” — is reasonable and often true, but it could benefit from some nuance. Here’s a critique:

Strengths of the Principle

- Recognises ethics beyond codification

  - It correctly points out that shared values and norms can exist and guide behaviour even when they’re not written down or formally systematised.

  - Many communities rely on “the way things are done” — unwritten rules that still have moral weight.

- Acknowledges cultural primacy

  - In some organisations, the ethos and traditions precede any explicit ethics policy, and these traditions act as a de facto framework for behaviour.

- Highlights implicit moral guidance

  - Even without a named “code of ethics,” behaviours are often policed indirectly through social approval, disapproval, and cultural expectations.

Possible Limitations

- Implied ethical coherence may be overstated

  - A culture can have traditions and norms without them forming a coherent “framework.”

  - Some cultures are a blend of contradictory influences, where guidance is situational rather than systematic.

- Risk of bias and exclusion

  - An informal framework may simply reflect the dominant group’s values, which may be discriminatory or self-serving rather than universally ethical.

  - Formal ethics often emerge to challenge such biases, not just to document existing norms.

- Vulnerability to change or erosion

  - Without codification, cultural norms can shift quickly, be misinterpreted, or be lost when leadership or membership changes.

  - This fragility means informal ethical systems may lack consistency over time.

- Possible absence of moral reflection

  - A culture might be rich in social customs but poor in explicit moral reasoning.

  - Informal guidance can drift into habit rather than being grounded in ethically reasoned principles.

Refined version of the principle

You might reframe it as:

“Where culture precedes formal ethics, it usually operates with implicit moral norms, which may function like an informal ethical framework — though not always coherently, inclusively, or consciously.”

This keeps your core insight but acknowledges that informality can mean inconsistency, unexamined biases, and gaps in moral reasoning.

If you’d like, I can map out a diagram showing how informal cultural norms, values, ethics, and ethos relate and evolve over time — which might help Anapoly and Marjon visualise these interdependencies. Would you like me to do that?

Alec said

<task>Tie these ideas together into an explanation which distinguishes values, ethics and ethos whilst recognising culture.

Perplexity said

Alright — let’s weave these strands into a single, coherent explanation that distinguishes values, ethics, and ethos, while recognising the central role of culture.

How Values, Ethics, Ethos, and Culture Connect

At the foundation are values — the beliefs and principles about what is important, desirable, or right. They answer the question: What matters most to us?

- Values may be personal (honesty, curiosity), organisational (innovation, accountability), or societal (justice, equality).

- They are the moral “raw material” from which everything else flows.

Culture is the lived environment shaped by shared history, relationships, language, and traditions. It is how people actually live and work together over time.

- Culture naturally expresses shared values but also shapes them — reinforcing some, downplaying others.

- Culture develops whether or not there is any formal ethical guidance.

- When culture comes first, it carries implicit norms — unwritten but widely understood expectations of behaviour. These can act like an informal ethical framework, but they are not always coherent, inclusive, or consciously examined.

Ethics is where values become explicit, systematic guidance for behaviour.

- An ethical framework takes values (and sometimes external influences like laws and professional standards) and turns them into principles, rules, or reasoning methods.

- Ethics asks: Given our values, how should we act, and why?

- Formal ethics codifies expectations; informal ethics may be embedded in culture without being written down.

Ethos refers to the characteristic spirit or “feel” of a group — the lived expression of values within a culture.

- While ethics defines the rules and reasoning, ethos captures the atmosphere and collective character that emerges when values are embodied in daily life.

- Ethos is tangible in tone, traditions, and shared habits: e.g., a research lab where “integrity and curiosity” aren’t just rules, but felt in how people question, listen, and share.

Putting it Together

- Values are the starting point — our moral compass.

- Ethics is a reasoned map that guides how we apply those values in real situations.

- Culture is the social terrain where these values and ethics live, evolve, and sometimes clash.

- Ethos is the vibe or spirit of that culture when values are actively lived out.

Example (Anapoly–Marjon context):

- Values: Transparency, social responsibility, curiosity.

- Ethics: A joint AI-use policy ensuring clear attribution, fairness, and student agency.

- Culture: A collaborative learning environment where open discussion and experimentation are the norm.

- Ethos: A spirit of “caring curiosity” about AI — you feel it in workshops, discussions, and documentation.

If you’d like, I can express this as a visual model where values feed into ethics, which interacts with culture to shape ethos — and culture also loops back to influence values. This might make the relationship clearer and more memorable.

Do you want me to create that diagram?

Alec said:

Yes, let's see what it looks like.

Perplexity said:

Alright — here’s a visual model that brings together values, ethics, culture, and ethos, showing how they relate and influence one another.

```text
┌─────────────┐
│   VALUES    │
│ "What       │
│ matters     │
│ most?"      │
└─────┬───────┘
      │ informs
      ▼
┌─────────────┐
│   ETHICS    │
│ "How should │
│ we act?"    │
│ (rules,     │
│ principles) │
└─────┬───────┘
      │ applied within
      ▼
┌─────────────┐
│   CULTURE   │
│ Shared      │
│ norms,      │
│ traditions, │
│ behaviours  │
└─────┬───────┘
      │ lived as
      ▼
┌─────────────┐
│    ETHOS    │
│ Spirit /    │
│ atmosphere  │
│ of the group│
└─────────────┘
```

Flow & Feedback Loops

- Values → Ethics: Values inspire the creation of an ethical framework (formal or informal).

- Ethics → Culture: Ethical guidance shapes everyday behaviour and group norms.

- Culture → Ethos: The recurring lived practice produces a shared “feel” or spirit.

- Culture ↔ Values: Over time, cultural experience reinforces or evolves values.

- Ethos ↔ Culture: A group’s spirit motivates how culture operates day-to-day.

💡 Anapoly–Marjon Example

- Values: Curiosity, transparency, care for society.

- Ethics: Agreed rules for fair, open AI use in student projects.

- Culture: Active experimentation, open feedback, respect for diverse perspectives.

- Ethos: A spirit of caring curiosity—you sense it in every discussion and collaboration.

If you’d like, I can also adapt this diagram into a circular model to show the cyclical nature of influence — which might visually suit the idea that culture itself can pre-date formal ethics and reshape values.

Do you want me to prepare that version next?

Alec said:

Yes, let’s have a look at that

Perplexity said:

Got it — here’s the circular version of the model so you can see how values, ethics, culture, and ethos influence one another in an ongoing cycle rather than a one-way chain.

```text
┌─────────────┐
│   VALUES    │
│ "What       │
│ matters     │
│ most?"      │
└─────▲───────┘
      │ shapes
      │ reinforces
      │
      ▼
┌─────────────┐
│   ETHICS    │
│ "How should │
│ we act?"    │
│ Rules &     │
│ principles  │
└─────▲───────┘
      │ guides
      ▼
┌─────────────┐
│   CULTURE   │
│ Shared norms│
│ & practices │
└─────▲───────┘
      │ lived as
      ▼
┌─────────────┐
│    ETHOS    │
│ Spirit /    │
│ atmosphere  │
└─────┴───────┘
      ▲
      │ influences
      └────────────── back to CULTURE
```

Why this matters

- It’s cyclical, not linear — values lead to ethics; ethics shapes culture; culture generates ethos; ethos reinforces culture; and over time, culture can also reshape values.

- Culture can start the loop — in some communities, shared norms and traditions evolve before any formal ethics are written, creating an implicit moral framework.

- Ethos keeps it alive — without the “feel” of a caring, principled community, ethics risk becoming hollow rules.

💡 Anapoly–Marjon example in this loop

- Values: Curiosity, transparency, social benefit.

- Ethics: Joint principles for fair and open AI use.

- Culture: Workshops, experiments, and discussions that normalise those principles.

- Ethos: A lived spirit of caring curiosity that motivates participation and trust.

…and this spirit feeds back into evolving the shared culture and refining the values.

If you like, I can turn this into a polished infographic with labels and arrows that visually emphasise the feedback loops.

Do you want me to prepare that visual?

Alec said:

Yes please, a polished infographic would be useful.

Perplexity said: